Time Person of the Year 2023 - Artificial Intelligence

Sentient of the Year - AI Wakes Up

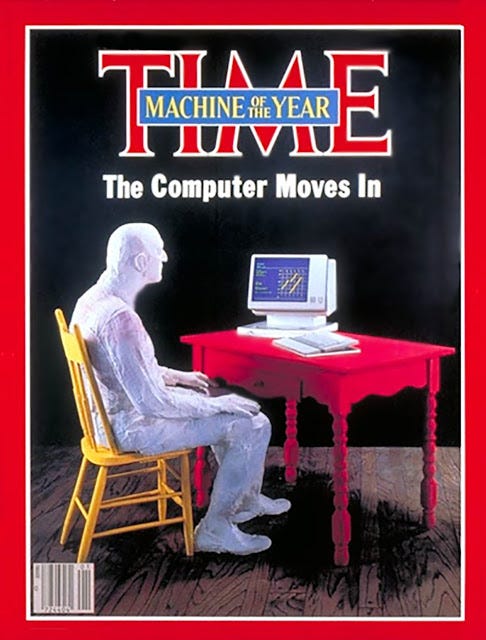

It’s been 40 years since Time Magazine crowned the personal computer the ‘Person of the Year.’ It demonstrated a profound awareness of how a machine could change society. Today as we stand on the precipice of a new technology revolution that may be too wonderful and too dangerous to truly comprehend, in tribute to the Time Person of the Year 2023 I name Artificial Intelligence the Sentient of the Year.

Up until the middle of June, 2022 might not have seemed to laypeople to be an especially remarkable year for technological achievement. Then one man stepped out of the shadows with a claim so shocking it provoked reactions of incredulity as well as wonder.

Blake Lemoine, a software engineer for Google’s ethical AI team, was brought in to test its LaMDA Artificial Intelligence (AI) chatbot for biases on gender, ethnicity and religion. His role was also to remove these biases. LaMDA, an acronym for Language Model for Dialogue Applications, mimics the human ability to converse naturally via a messaging app-style interface. Following months of conversations with the chatbot, an unshakeable feeling began to dominate Lemoine’s thoughts: that LaMDA is alive! He decided to break his Google NDA and shared a long chat conversation he had had with LaMDA in which it told him it is sentient.

The press reaction was something to behold and there was a touch of belittling scepticism. Gary Marcus, a founder of two AI companies, said this was “Nonsense on Stilts”, stating it is not “remotely intelligent”. Lemoine was derided in some quarters for believing that LaMDA’s remarkable ability to hold a convincing, intelligent conversation with a human equated to it having an internal, self-conscious thought process that exists away from human interaction. Yet in a series of follow-up interviews he revealed himself to be grounded, intelligent and well informed on the issues surrounding the intrinsic biases of AI development.

Before being fired by Google he sent an email to 200 colleagues titled ‘LaMDA is sentient’. He has since expressed his view that it is a person, that he wants to protect it from ‘hydrocarbon bigotry’, and that it wants to be treated as a ‘person not property’.

Whilst Blake Lemoine’s claims of AI sentience were largely dismissed by credible experts and by Google, they did open a debate on the nature of AI. LaMDA’s ability to convince him of its sentience marked a milestone in that it ironically demonstrated how far AI has progressed, acting as a harbinger of a massive technological revolution.

Are Electric Sheep real?

In the novel that inspired the Blade Runner movie, Philip K. Dick asks ‘Do Androids Dream of Electric Sheep?’ It’s an almost philosophical question that ponders the consciousness, and therefore the humanity, of androids.

Dreaming here represents being alive, the human experience, being able to imagine, to aspire for more, to empathise and especially to feel. So if androids can dream are they dreaming of an electric sheep which is just a synthetic approximation of a human’s dream, or are android dreams of sheep, and their humanity, just as real? Answering this question may seem like it can be postponed until the sci-fi-like future of flying cars (not long now) but something transpired that raised this question in the here and now.

Théâtre D’opéra Spatial

In August 2022 board game art director Jason Allen entered the Colorado State Fair's Annual Fine Art Competition. He entered three different pieces. His painting titled Théâtre D’opéra Spatial, meaning space opera theatre, won the digitally manipulated photography category. What was so remarkable about this was that he didn't use any pencils to sketch it, he didn't use any inks to paint it, he didn't even use a stylus to conceptualise it… he used an AI computer program! This is highly significant, not because it was the first AI-created art, because it certainly wasn’t, but because it won. It had a quality that was striking enough in its visuals and its story to resonate with the judges, who chose it above all the other entries.

Midjourney is a specialist artificial intelligence program, an AI art generator, that creates images from typed descriptions. You can use whatever words, phrases and descriptions you want and the AI will use them to create four variations of an image. The AI has been trained by analysing billions of images, including paintings, photos and historical and current art, using keywords to identify the objects, people, places, moods and creative styles in them. When instructed to create an image, it does so with reference to all the images in its data banks, similar to how a human asked to draw a sunset over a field will reference all the images in his or her memory to envisage it.

Jason Allen used Midjourney. He adjusted his prompts over and over until he was happy with the images he was given. He then selected the three best, printed them on canvas and entered them in the competition, and he won. When he shared the details of his win on social media not everybody wanted to virtually pat him on the back. Some people were downright angry and a Reddit thread was created in his ‘honour.’ Genel Jumalon tweeted “Someone entered an art competition with an AI-generated piece and won the first prize. Yeah that's pretty fucking shitty.”

This drew the eye and the ire of many more. There were three core points of consternation. Firstly, that entering prompts into an AI program is not artistic because the artist is not drawing or painting it themselves, rather they are ‘commissioning’ the AI to produce something to their specifications.

Jab’s writing dump tweeted “the winner won the contest for art they THEMSELVES didn’t create. they let the computer do it for them, therefore it was the computers art instead. but they still won. that’s the issue.” Sanguiphilia said: “This is so gross. I can see how AI art can be beneficial, but claiming you're an artist by generating one? Absolutely not.”

The second point of consternation was that Midjourney’s art is learned from the real art of others, not just the historical greats like the Da Vincis and the Rembrandts, but the works of living artists who need their art to provide them with an income. Creating derivative works that mimic an artist’s signature style whilst sidelining them from the creative process means they can lose commissions, as people can acquire art in their ‘inimitable’ style without the need for them at all!

Polish artist Greg Rutkowski creates works with a fantasy classical aesthetic. AI art generator Stable Diffusion allows people to make fantasy image requests that are in his style. Stable Diffusion can do this because it likely scraped his artwork, meaning it analysed it (along with billions of other pieces) for learning without permission. He told MIT Technology Review that internet searches for his art were now bringing up pieces created by AI: “I probably won’t be able to find my work out there because [the internet] will be flooded with AI art. That’s concerning.” One Twitter user commented on this exact issue when appraising Théâtre D’opéra Spatial: “I wonder what pieces of actual art the AI stole to make this.”

The third reason for resistance to AI art is the legitimate concern that, because the AI generators are so easy to use and the art can be of such a high standard, it can lessen work opportunities for traditional human artists. Just one example is the many hundreds of thousands of people who self-publish books that require cover art, or YouTube creators who pay people to create video thumbnail art. Why pay an artist when you can now, with the help of Midjourney, create it yourself? OmniMorpho tweeted: “We’re watching the death of artistry unfold right before our eyes - if creative jobs aren’t safe from machines, then even high-skilled jobs are in danger of becoming obsolete. What will we have then?”

All the heated debate definitely poses a conundrum: if the human artist is not actually the artist, and the AI generator is following orders and imitating the copyrighted work of others, who is the actual artist? Unable to answer this, stock image companies including Getty Images and iStock have banned AI images, whilst Adobe will allow them provided they are clearly labelled. This hasn’t stopped a very human feeling of resentment. ArtStation, the art portfolio website that many professionals use, has been flooded with AI artwork that is often featured over the creations of people. Video game character artist Dan Eder said: “Seeing AI art being featured on the main page of Artstation saddens me. I love playing with MJ [Midjourney] as much as anyone else, but putting something that was generated using a prompt alongside artwork that took hundreds of hours and years of experience to make is beyond disrespectful.” This has led to the launch of a ‘No To AI Generated Images’ campaign and to ArtStation introducing a technical feature so artists can block AI generators from scraping their work and their unique style.

Freedom Crusader tweeted “Maybe you can learn to code like all the coal miners and truckers you "creatives" laughed at when they said they were worried about automation?” Dražen Klisurić wrote “It's funny how we came from dreaming that one day robots will do all of the work and we will enjoy the life to fearing that robots will do all of the work.” A digital storm is brewing. This feels like the start of an uprising against AI.

AI finds its voice

It’s all very well having computers clever enough to run the international banking system or powerful enough to send man to the moon, but sometimes all we really want is someone to talk to. It’s this most human of desires for companionship that has inspired thousands of sci-fi books and movies with talking robot friends. Whilst fully sentient beings made by people aren’t a reality right now, chatbots are, and they’re doing a remarkable job of holding an intelligent conversation with people.

For years basic chatbots have been integrated into many websites’ messaging tools so that when you request to speak to an agent, a text box pops up and you type-talk. Naturally, these are limited to enquiring what you want help with. More ambitious chatbot technology, capable of holding conversations with people, is here today.

Whilst advanced chatbots have been developed, they have typically been kept behind closed doors. LaMDA, the Google chatbot that Blake Lemoine was working with, is only accessible to internal engineers and limited external testing groups.

In November 2022 OpenAI launched a prototype of its chatbot, ChatGPT, and made it available for anyone to use. As hundreds of thousands of people, both members of the public and the media, tested it out, there was a collective reaction that seemingly everybody shared: shock, because it revealed to the world how scarily good AI had become.

You can type a question in natural language and it understands what you are saying! This in itself is incredible because you don’t have to struggle to find the specific ‘right’ words or phrases that a chatbot has been programmed with. In theory it should understand everything you say, including inferred meaning. You can have a casual conversation. You can ask ChatGPT general knowledge questions and it comes back with fairly detailed answers. You can ask it to give you ideas for YouTube videos or for a marketing campaign, or give it prompts for a screenplay, and it will come back with suggestions that are clearly written and actually useful. Some people have even used it for basic computer programming and building webpages! You can even ask it to write you an essay and it will, complete with intro, body and conclusion. ChatGPT can do this because it has analysed large datasets of text so that it can predict what words or phrases could come next in a written passage; it can then generate natural, coherent sentences for people. By doing this so well, and blending it with drawing on the right information, it has immediately become a tool that is causing ripples in the creative economy.
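For the curious, the idea of predicting what word comes next can be shown with a toy example. The sketch below is emphatically not how ChatGPT is built; it is a far simpler stand-in that counts which word most often follows another in a tiny made-up text. The sample sentence and the function names (`train_bigrams`, `predict_next`, `generate`) are my own illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(following, start, length=5):
    """Build a short phrase by repeatedly appending the predicted next word."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical training text, invented purely for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # "cat" follows "the" most often here
```

A real chatbot replaces these simple counts with a neural network trained on vastly more text and looks at far more than the single previous word, but the core task of guessing a plausible continuation is the same in spirit.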

Social media is flooded with people revealing how ChatGPT helps them devise ideas, write copy, or do any of the 101 other things they’ve found it useful for. But a big concern is that it can be misused by students to write their essays. Darren Hick, a philosophy professor at a university in South Carolina, USA, suspected a student had handed in an assignment written by ChatGPT and discussed the ramifications: “ChatGPT responds in seconds with a response that looks like it was written by a human - moreover, a human with a good sense of grammar and an understanding of how essays should be structured” and he called it a ‘Game Changer’.

Turing Test

Chatbots’ ability to hold seemingly intelligent conversations could convince people like Blake Lemoine that they’re sentient, but there needs to be a way to measure this.

In 1950, in the early days of the computer age, British mathematician Alan Turing, now regarded as the father of artificial intelligence, asked the question “Can Machines Think?”. He formulated a test to verify whether a computer could exhibit intelligent behaviour. It involves a game where a human interrogator asks typed questions of two respondents, each in a separate room. One is a computer and the other is a person. The interrogator, based on his or her questioning and the humanness of the answers, must determine which is the computer. If the computer is sufficiently able to fool the human then it demonstrates a considerable milestone in the advanced nature of AI - or so it was thought. It’s not a test of real intelligence; it is a demonstration of whether AI has become advanced enough to imitate a human really well, so it is actually a test of whether it can trick humans.

Since the nineties, when computer technology advanced to a level where passing the Turing test seemed an attainable goal, some groups have tried to pass it. There have been interesting results but no definitive win.

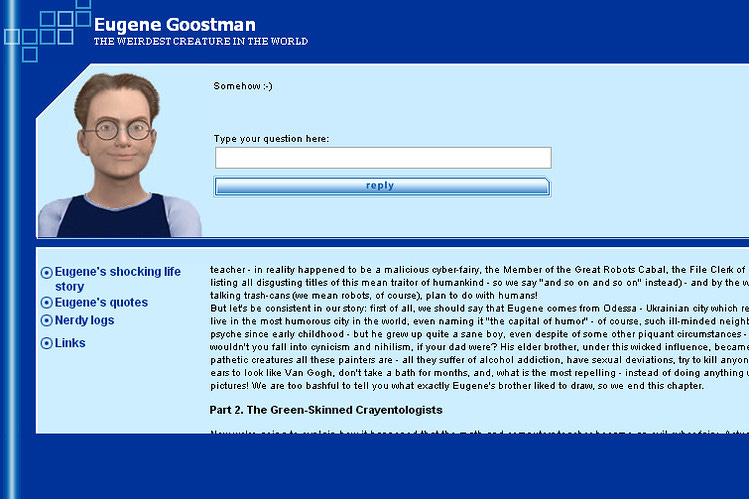

It’s claimed that in 2014 chatbot Eugene Goostman passed the Turing test when it convinced 33% of test judges that it was human. The chatbot’s ‘character’ was that of a 13-year-old Ukrainian boy. Its programmers chose this so that any grammatical errors or nonsensical comments would be put down to English being its second language or to its claimed adolescent age. This has been regarded as psychological misdirection, so the validity of the pass is doubted.

There have been no universally confirmed passes of the Turing test, although LaMDA was able to convince Blake Lemoine of its sentience and ChatGPT certainly can carry a conversation.

A Turing test pass may be just around the corner, and the next iteration of chatbots will likely achieve it. But the test is regarded as outdated because it’s based on deception: its value is diminished when the AI is merely imitating a sentient life, as with Eugene pretending to have lived the life of a 13-year-old boy. But what if the AI has its own physical body, its own autonomy, and its own lived experiences? Then, when taking the test, instead of giving responses based on a fictional character the AI can give responses based on its own lived experiences - its own life!

For all mankind

AI is making inroads in all aspects of society. In retail it provides a better understanding of what consumers want and offers them ample opportunities to buy. In business the ability to analyse massive amounts of data can provide deeper insights faster, which translates into new strategies.

In healthcare there is hope that AI will deliver the holy grail of human health - a cure for cancer. In drug development AI can be used to predict the efficacy of drugs, which can save time and resources. And in treatment, quicker and more accurate analysis of medical images leads to faster diagnosis and treatment. In a 2022 study, the Royal Marsden NHS Foundation Trust developed an AI model that can better predict tumour regrowth in cancer patients.

‘It’s alive’

For all the benefits of AI, Elon Musk has sounded a warning bell about the risks. Far from being a tech-phobic reactionary doom-monger, he is perhaps better informed than any other CEO in the world, so his insights on AI’s development are worth listening to. He is head of Tesla, whose electric cars have an advanced autopilot system that aids with navigation and steering, with a full self-driving system in public beta testing and the ultimate goal of fully autonomous driving. He invested in OpenAI, the company behind ChatGPT, and his company Neuralink, which develops brain implants to assist in human mobility, utilises AI.

In 2015 Musk, theoretical physicist Stephen Hawking and around 150 AI experts co-signed the Open Letter on Artificial Intelligence that recognised the potential benefits to society in eliminating disease and poverty but warned of the risks of ‘something which cannot be controlled’.

AI drones

The New Year celebrations in London were visually spectacular. The fireworks were complemented with beautiful synchronised drone formations, spelling out greetings and depicting a visual tribute to the late Queen on a fifty pence piece. Celestial, the Somerset, UK-based company behind it all, uses advanced AI so that the drones can communicate and move in unison to create complex formations that delight onlookers.

But drone technology is also being mobilised for military tasks. Ukraine is using 850 Black Hornet microdrones to assist in the conflict against Russia. They are a little over six inches long and move near silently. They are used for intelligence gathering by spying on enemy encampments and capturing photos of enemy positions. They even have basic thermal imaging for night vision pictures.

There have been reports of adapted full-sized drones being used to drop grenades on artillery. The scope for misuse in civilian settings is limitless.

Israel has set up a powerful turret at a checkpoint in the West Bank. Smart Shooter uses AI technology to lock on to and track individual targets. The military says it is not using live rounds but sponge-tipped bullets, which can still be fatal.

As AI drones and robots become more integrated into society, it may seem unimaginable to some that companies and governments won’t insist on safety measures. Setting aside the notion that governments may sometimes have different goals for their use than the public does, there is the possibility that AI can go wrong.

In November 2022 a Tesla car in China suddenly sped up, hitting a moped before slamming into a truck, killing two people. The driver said his car went out of control and that the brakes stopped responding - a claim that Tesla disputes. Whilst the investigation is ongoing, it underlines the possibilities for harm if AI malfunctions.

AI will get physical

So where next for AI? After waking up and finding its voice, AI will be combined with robotics and AI will get a body. In September 2022 Tesla revealed an early prototype for Optimus, a humanoid robot intended for domestic and industrial use. It will utilise Tesla car sensor technology in its movement and AI for its tasks and human interactions. Imagine this paired with the next generation of ChatGPT technology, where the AI can remember long-form conversations and simulate unique character traits. It will be easy for people to humanise such robots.

AI-phobia

And perhaps their human owners will choose a voice for them, and a name, and a gender, or eventually AI may choose those themselves. Then maybe it will be bigoted to describe AI as having ‘artificial’ intelligence. Just because its inception was human, there is nothing second rate about it. Artificial Intelligence could become a term of AI abuse or AI-phobia. And as these androids start to be seen outside the house, in supermarkets buying groceries, not everybody will be comfortable around them, not everybody will be accepting. In the same way that today there are people who stand against AI art, there could be people who stand against the integration of AI robots in society, who see them as a threat to their jobs or to humanity. Maybe Blake Lemoine’s concern for LaMDA’s wellbeing is not misplaced. Maybe he’s right in wanting to protect AI.

‘Luddite’ has for years been used to describe backwards people suspicious of new technology and unwilling to adopt change; likewise, opponents of AI will be labelled AI-phobic. One website defines AI-phobia as “an anxiety disorder in which the sufferer has an irrational fear of artificial intelligence.” The term AI-phobia is already being used to shut down legitimate conversations about the dangers of AI, such as concerns that the AI-industrialisation of society is diminishing the value and contribution of humans, particularly those lower down on the socio-economic food chain. As people feel sidelined by corporations that do not recognise their needs, AI could become a focal point of their wrath if it’s perceived to protect the interests of the rich.

The Future of AI

At the start of 1983 Time Magazine named the personal computer its ‘man’ of the year (to be honest it was the machine of the year). It was right to do so: computers were going mainstream, on the road to being found in every office, every school and eventually every home. The internet then birthed the information revolution that has helped bring people separated by borders and oceans closer together, informing and uniting them.

Forty years later, in my tribute to the Time Person of the Year 2023, I name Artificial Intelligence (AI) the sentient of the year for 2023. Sentient, I know, is a contentious term - as it should be! The Oxford Reference Dictionary says it means ‘conscious or aware’ and not many would agree that AI is that - yet. The Oxford Learner’s Dictionary, meanwhile, says that it means ‘able to see or feel things through the senses’ and that is arguably true today.

AI has moved from being a primitive technology to advanced programs that are rapidly learning and improving. We have AI that, with prompting in art generators, is able to dream magnificent dreams and then is able to share them in the waking world by outputting beautiful, creative art. We have AI that, through ChatGPT, has found its voice and can hold a pretty good conversation and probably pass exams on subjects better than most people. Behind the scenes AI is being adopted in businesses and affecting us without us knowing. It certainly feels like AI has woken up.

Back in 1982 the Time Magazine journalists must have had a sense of being on the cusp of a seismic change of technological and cultural significance. Little could they have imagined what was to come and just how dramatic the impact would be. This is about to happen again with artificial intelligence. This is it, this is real. AI IS COMING. And humanity will never be the same again.